Out of the box there's no solution to keep the original source values of ModifiedOn and ModifiedBy when migrating data records to Dynamics 365. In an on-premises deployment you could accomplish the task by the unsupported query update on the destination database; but if you are migrating to cloud Dynamics 365 database access is not an option. In this article I'll walk you through step-by-step guide of one solution proven to work in migration projects. The migration scenario is to move data from an older Dynamics CRM system to online Dynamics 365/CRM; but this could work for other data sources wishing to migrate to Dynamics 365/CRM.

The steps are:

1. Create temporary fields in destination entity to hold the source values of ModifiedOn and ModifiedBy.

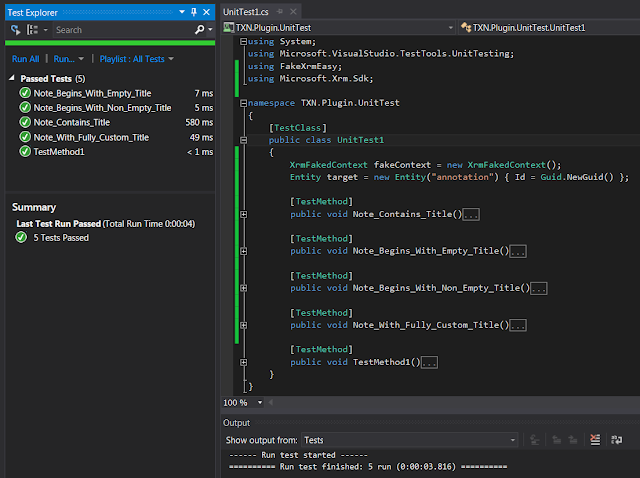

2. Write plug-in to set ModifiedOn/By values of destination entity.

3. Register the plug-in.

4. Map source fields to temporary fields and run the migration/import

Step 1: Create 2 temp fields new_ModifiedOn and new_ModifiedBy in the destination environment. Whatever method/tool of migration you will use, the source ModifiedOn will set value for destination new_ModifiedOn; and source ModifiedBy will set value for destination new_ModifiedBy.

Step 2: Write plug-in to set value of destination ModifiedOn and ModifiedBy. Core code for the plug-in is at the end.

Step 3: Register the plug-in, on create message and Pre-Operation.

Step 4: Map the fields for migration/import, like I mentioned in Step 1 - source ModifiedOn to destination new_ModifiedOn, and ModifiedBy to new_ModifiedBy

Now if you run the migration/import, the plug-in will pickup the original values in temp fields and set them for ModifiedOn/ModifiedBy upon creation of new record.

Here is my plugin:

using System;

using Microsoft.Xrm.Sdk;

using Microsoft.Xrm.Sdk.Query;

namespace TXN.Plugin.PreserveRecordInfo

{

public class PreCreateRecord : IPlugin

{

#region Secure/Unsecure Configuration Setup

private string _secureConfig = null;

private string _unsecureConfig = null;

public PreCreateRecord(string unsecureConfig, string secureConfig)

{

_secureConfig = secureConfig;

_unsecureConfig = unsecureConfig;

}

#endregion

public void Execute(IServiceProvider serviceProvider)

{

ITracingService tracer = (ITracingService)serviceProvider.GetService(typeof(ITracingService));

IPluginExecutionContext context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));

IOrganizationServiceFactory factory = (IOrganizationServiceFactory)serviceProvider.GetService(typeof(IOrganizationServiceFactory));

IOrganizationService service = factory.CreateOrganizationService(context.UserId);

if (context == null) throw new InvalidPluginExecutionException("IPluginExecutionContext is null");

if (service == null) throw new InvalidPluginExecutionException("IOrganizationService is null");

if (tracer == null) throw new InvalidPluginExecutionException("ITracingService is null");

tracer.Trace("TXN: message: " + context.MessageName + " | Timestamp: " + DateTime.Now);

if (context.InputParameters.Contains("Target") && context.InputParameters["Target"] is Entity)

{

Entity entity = (Entity)context.InputParameters["Target"];

string _message = context.MessageName.ToUpper();

string _entityLogical = entity.LogicalName;

tracer.Trace("TXN: entity: " + entity.LogicalName + " | GUID: " + entity.Id.ToString());

//TXN: Filter the message and your entities here

if (!(_message == "CREATE" ) ||

!(_entityLogical == "knowledgearticle" ||

_entityLogical == "adobe_agreement" ||

_entityLogical == "phonecall" ||

_entityLogical == "fax" ||

_entityLogical == "letter" ||

_entityLogical == "appointment" ||

_entityLogical == "email" ||

_entityLogical == "task"))

return;

try

{

DateTime _initDate = new DateTime(2099, 12, 12);

DateTime _modifiedOn = _initDate;

string _modifiedBy = null;

if (entity.Contains("new_modifiedon") && entity.Attributes["new_modifiedon"] != null)

{

_modifiedOn = (DateTime)entity.Attributes["new_modifiedon"];

tracer.Trace("TXN: mapped new_modifiedon: " + _modifiedOn.ToString());

}

if (entity.Contains("new_modifiedbyname") && entity.Attributes["new_modifiedbyname"] != null)

{

_modifiedBy = (entity.Attributes["new_modifiedbyname"]).ToString();

tracer.Trace("TXN: mapped new_modifiedbyname: " + _modifiedBy);

}

//TXN: Set original modifiedon value in destination record

if(!_modifiedOn.Equals(_initDate))

{

entity.Attributes["modifiedon"] = _modifiedOn;

tracer.Trace("TXN: set modifiedon to: " + _modifiedOn.ToString());

}

//TXN: find new_modifiedbyname and set that user to destination record's modifiedby

if (_modifiedBy != null)

{

var fetchXml = @"<fetch version='1.0' output-format='xml-platform' mapping='logical' distinct='false'>

<entity name='systemuser'>

<attribute name='fullname' />

<attribute name='systemuserid' />

<filter type='and'>

<condition attribute='fullname' operator='eq' value='{0}' />

</filter>

</entity>

</fetch>";

fetchXml = string.Format(fetchXml, _modifiedBy);

EntityCollection users = service.RetrieveMultiple(new FetchExpression(fetchXml));

if (users != null && users.Entities.Count > 0)

{

entity.Attributes["modifiedby"] = users.Entities[0].ToEntityReference();

tracer.Trace("TXN: set modifiedby to user: " + users.Entities[0].GetAttributeValue<string>("fullname"));

}

}

}

catch (Exception e)

{

throw new InvalidPluginExecutionException(e.Message);

}

}

}

}

}